Deep Learning: Image Analysis

FF03 · ©2015 Cloudera, Inc. All rights reserved

This is an applied research report by Cloudera Fast Forward. Originally published in 2015, it features a lot of continually relevant information on how neural networks can be used to interpret images. If you would like more current information on the state of the industry, be sure to check out our updated report on image analysis.

Read the full report below or download the PDF.

Introduction

Imagine fixing your car by taking a picture of your engine and having an AI mechanic guide you through the repairs. Or a machine that looks at a rash or bug bite and tells you whether it needs professional attention. Or maybe a program that looks at your garden and warns you which plants are at risk of dying. These ideas may sound like science fiction, but they are now becoming feasible. Recently, we’ve seen massive progress in the development of systems that can automatically identify the objects in images using a technique known as Deep Learning. This is a breakthrough capability.

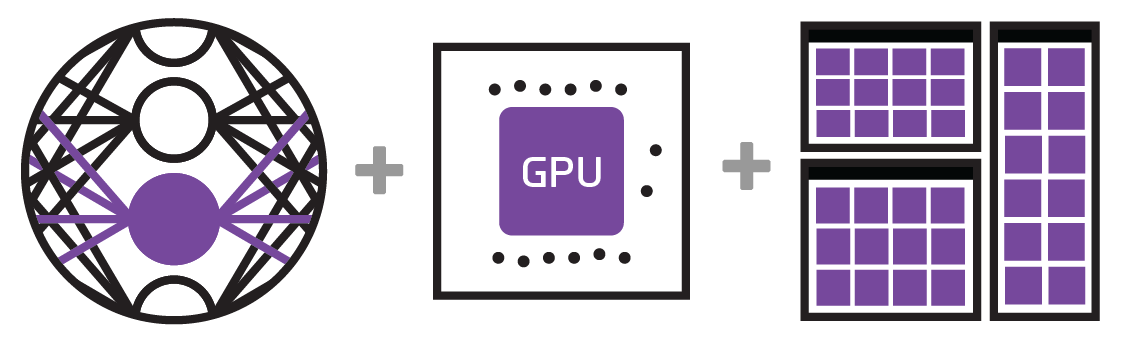

These systems are emerging now, due to multiple factors. First, there’s been strong progress in our theoretical understanding of artificial neural networks. Neural networks are computational systems made up of individual, interconnected processing nodes that adapt to new input. While they’ve been around since the 1950s, this recent progress has opened up entirely new applications.

Second, graphical processing unit (GPU) computation has become affordable. GPUs were primarily developed for video gaming and similar applications, but are also optimized for exactly the kinds of operations that neural networks require.

Finally, large image and video datasets are now available. This, more than anything, has motivated and enabled significant progress in both research and industry applications. The result is that we are now able to build affordable systems that can analyze rich media (images, audio, and video) and automatically classify them with high accuracy rates.

This has strong implications for anyone building data processing and analytics systems. Current approaches are, out of necessity, largely limited to analysis of text data. This limitation (frequently glossed over by many analytics products) comes from the fact that images can be permuted in many more ways than sentences. Consider that the English language only contains roughly 1,022,000 words, yet each pixel from an image can take on any of 16,777,216 unique color values. Moreover, a single 1024 x 768-pixel image contains as many pixels as Shakespeare had words in all of his plays!

Neural networks, however, are fantastic at dealing with large amounts of complex data because of their ability to internally simplify and generalize their inputs. Already, with high accuracy, we are able train machines to identify common objects in images. It’s exciting to think that we are now able to apply the same kinds of analyses that we’ve been doing on text data to data of all types.

The structure of neural networks was initially inspired by the behavior of neurons in our brains. While the brain analogy is a romantic one, the relationship between these systems and the human brain stops there. These machines do a very good job of solving very specific problems but are not yet able to approach generalized intelligence. We don’t need to worry about the Terminator just yet.

In this report we explore Deep Learning, the latest development in multilayered neural networks. These methods automatically learn abstract representations of their training data to perform some sort of classification or regression task, where you are training a system to look at examples of data with labels and apply those labels to new data. While Deep Learning has implications for many applications, we focus specifically on image analysis because this is one domain where similar results cannot currently be achieved using more traditional machine learning techniques. Deep Learning represents a substantial advancement in image object recognition.

Neural network-based image recognition systems have actually been used in the wild for quite some time. One of the first examples of neural networks applied in a product is the system that recognizes the handwriting on checks deposited into ATMs, automatically figuring out how much money to add into any account.

Image analysis is just the beginning for Deep Learning. In the next few years, we expect to see not only apps that can look at a photo of leaky plumbing or a damaged car and guide you through the repairs, but also apps that offer features such as realtime language translation in videoconferencing and even machines that can diagnose diseases accurately.

Neural Networks

Neural networks have been around for many years, though their popularity has surged recently due to the increasing availability of data and cheap computing power. The perceptron, which currently underpins all neural network architectures, was developed in the 1950s. Convolutional neural networks, the architecture that makes neural image processing useful, were introduced in 1980. However, recently new techniques have been conceived that allow the training of these networks to be possible in a reasonable amount of time and with a reasonable amount of data. These auxiliary algorithmic improvements, in addition to computational improvements with GPUs, are why these methods are only now gaining popularity. Moreover, these methods have proven themselves to excel at extracting meaning from complex datasets in order to properly classify data we didn’t think could be algorithmically classified before.

In this section we’ll look at the basic concepts needed to understand how neural networks function and the recent advances that have greatly expanded their possible applications.

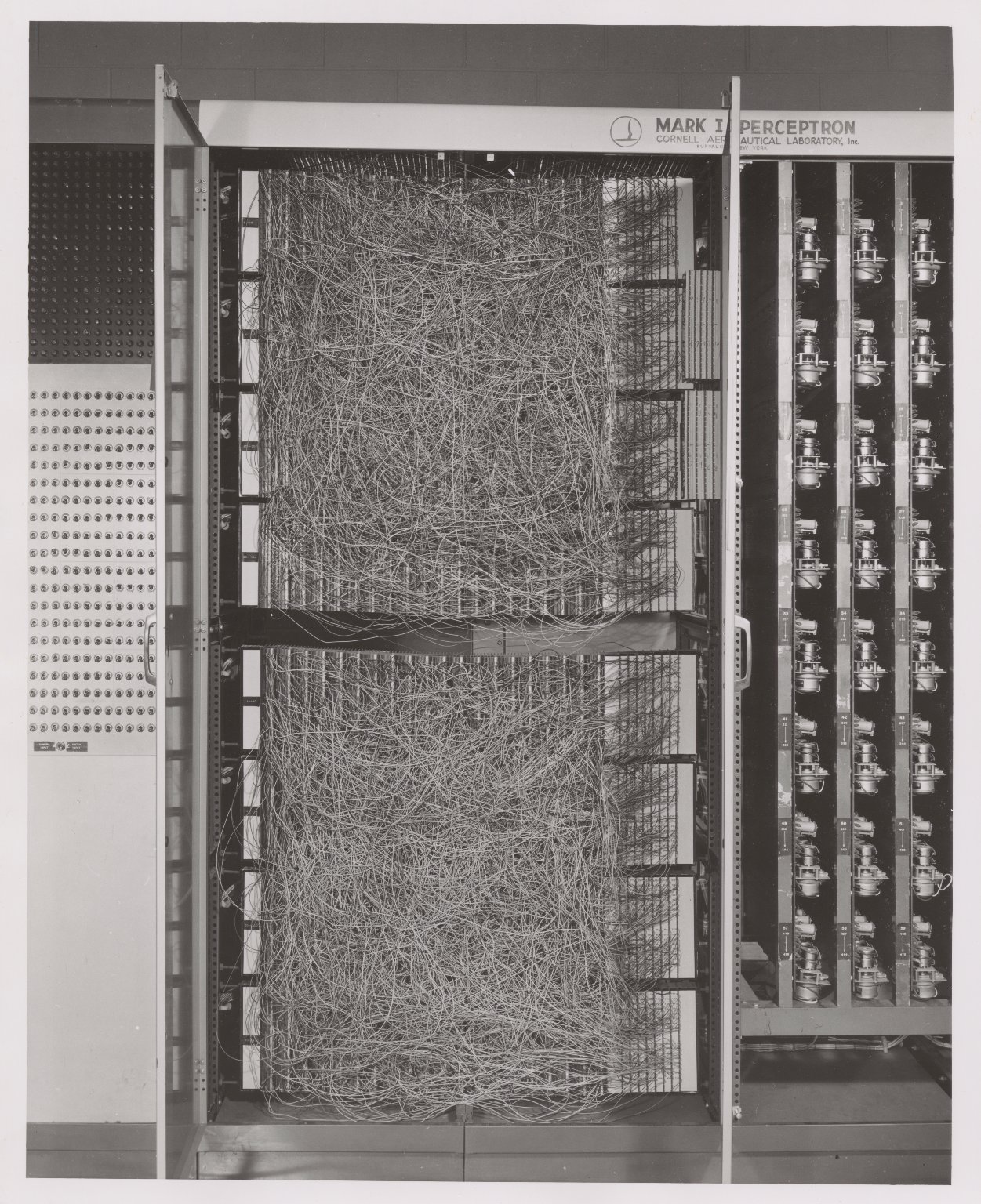

The Perceptron

The basic elements of a modern neural network – neurons, weighted connections, and biases – were all present in the first neural network, the “perceptron,” invented by Frank Rosenblatt at Cornell Aeronautical Labs in 1958. The design was grounded in the theory laid out in Donald Hebb’s The Organization of Behavior, which gave a method for quantifying the connectivity of groups of neurons through weights. The initial technology was an array of photodiodes making up an artificial retina that sent its “visual” signal to a single layer of interconnected computing units with modifiable weights. These weights were summed to determine which neurons fired, thus establishing an output signal.

Rosenblatt told the New York Times that this system would be the beginning of computers that could walk, talk, see, write, reproduce themselves, and be conscious of existence. This field has never lacked imagination! Soon researchers realized that these statements were exaggerated given the state of the current technology – it became clear that perceptrons alone were not powerful enough for this sort of computing. However, this did mark a paradigm shift in AI research where models would be trained (non-symbolic AI) instead of working based on a set of preprogrammed heuristics (the Von Neumann architecture).

As the simplest versions of neural networks, understanding how perceptrons operate will provide us insight into the more complex systems popular today. The features of modern networks can be viewed as solutions to the original limitations of perceptrons.

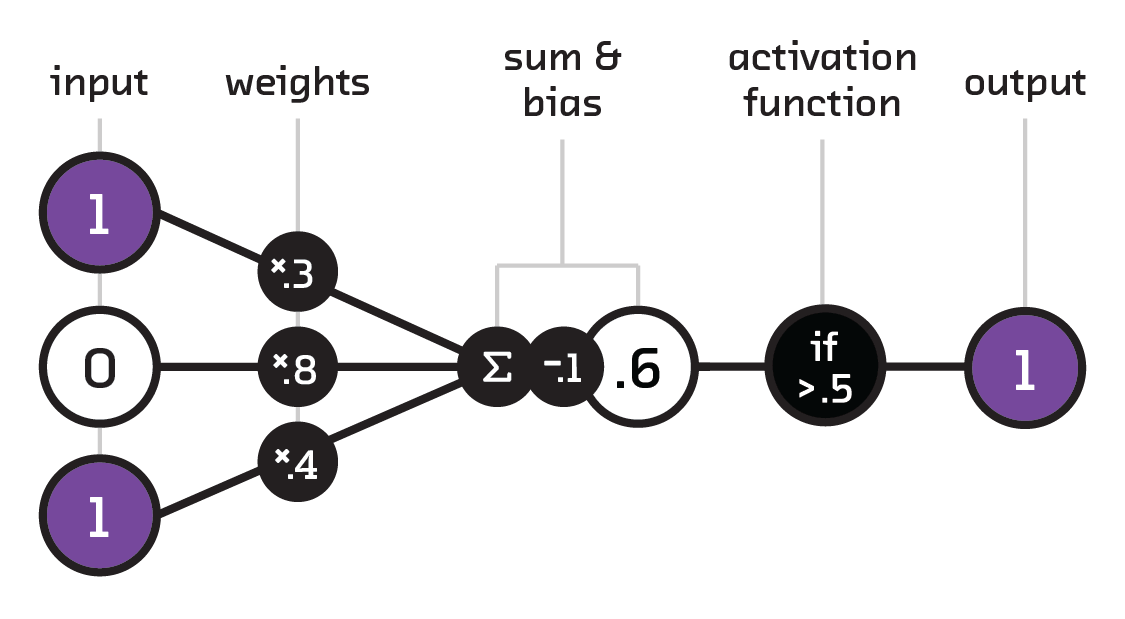

To understand neural networks, we must first unpack the basic terminology: individual computational units (neurons) are connected (i.e., pass information), such that each connection has a weight and each neuron a bias. A number that is passed to a neuron via a connection is multiplied by the weight of that particular connection, summed together with the other inbound connections, and adjusted by the neuron’s bias. This result is passed to an activation function that determines whether the neuron “fires” or not. An active, or fired, neuron passes the result on. If the result does not meet the activation threshold, then it is not passed on.

Perceptrons are simple binary classifiers that use the above computational

components, take in a vector input (see <

The term neuron stems from the biological motivation behind neural nets. A primary property of a brain is its ability to wire (and rewire) neurons together so that, given some input signal (e.g., sound in your ear), groups of neurons will fire together and activate different brain regions, leading to a nervous system or other response. Neurons inside the brain receive input voltages from many connections, but only fire if the current is strong enough to pass across the synapse and carry the electrical signal to them. Similarly, the weights in neural networks allow us to bias certain input connections more than others to extract the relevant features.

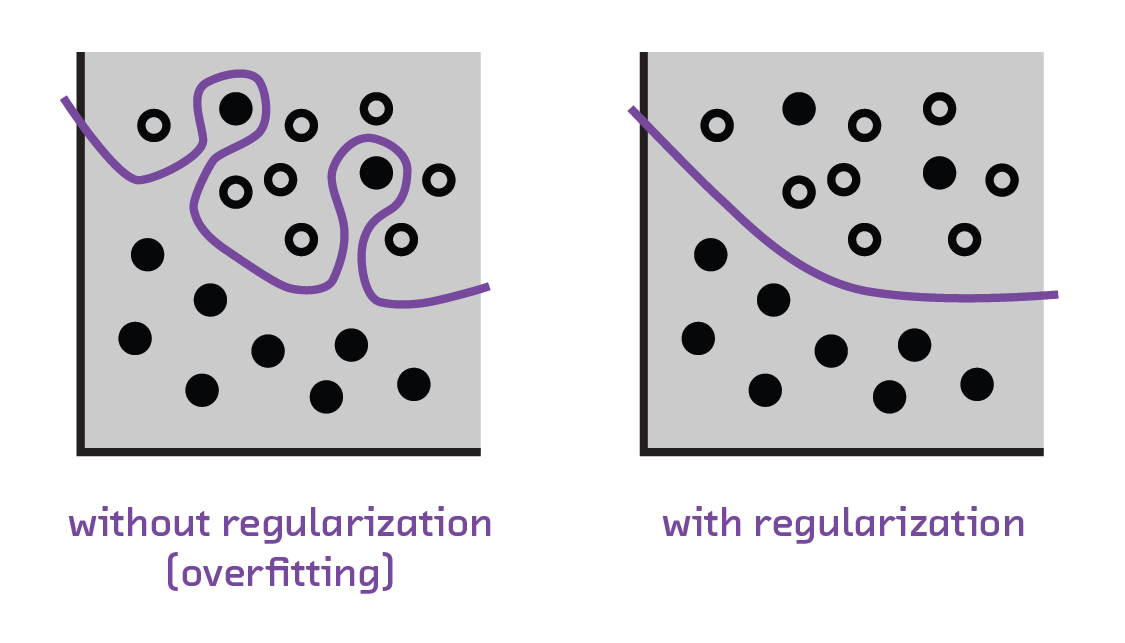

Input Vectors

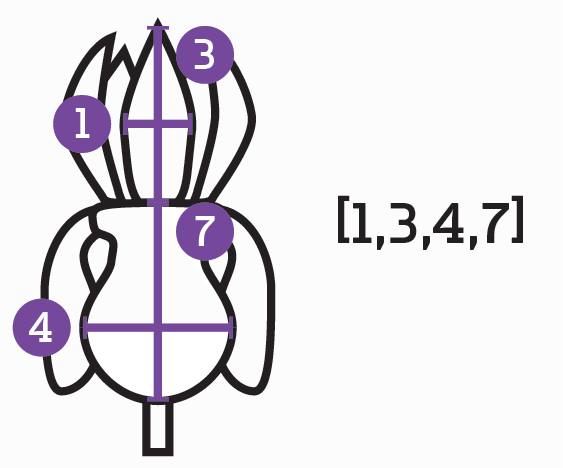

Like most machine learning models, neural networks require an input vector to process. An input vector is a way of quantifying an input as a series of numbers. Neural networks operate by passing this input through layers of neurons that transform the input vector into your desired output.

If we wanted to quantify the properties of a flower as an input vector, we could form a list of numbers describing the flower’s height, the length of the petals, three values for the color (one for each of the red/green/blue values), etc. footnote:[This exact example is part of a classic “hello world” dataset for machine learning called the Iris Dataset.] To quantify words the bag of words approach is generally used, where we create a “master” list in which every possible word has a position (e.g., “hello” could be the 5th word, “goodbye” could be the 29,536th word). Any given passage of text can be quantified using this approach by simply having a list of 0s and 1s, where a 1 represents that that word is present in the passage. An image, on the other hand, is already a quantification of a visual scene – computer image formats are simply 2D lists of pixels, which are just numbers representing the RGB values. However, when creating a vector out of them, we must discard the 2D nature of the data and turn it into a flat list, thus losing any spatial relationships between the pixels.

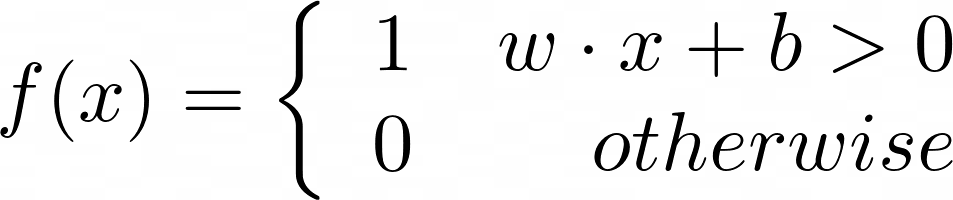

What makes a perceptron interesting is how it handles weights. To evaluate a perceptron, we multiply each element of the input with a series of weights and then sum them; if the value is above a certain activation threshold, the output of the perceptron is “on.” If the value is below the threshold, the output is “off”:

In this formulation, w encodes the weights being used in the calculation and

is a vector with the same size as the input, x. There is also a bias (also

called a threshold), which is simply a constant number. The result of the

function f(x) defines the classification. That is to say, if we train our

system such that 0 means dog and 1 means cat, then f(a)=0 means that the

data in the vector a represents a dog and f(b)=1 means that b represents a

cat.

While training perceptrons is much simpler than the training regimes we will soon get into, they still do need their weights tuned in order to be useful. For this, we must define a cost function, which essentially defines how far we are from our desired output. We will go into this in more detail soon; for now, it’s useful to know that we are attempting to converge on a result that minimizes our cost function by slowly changing our weights.

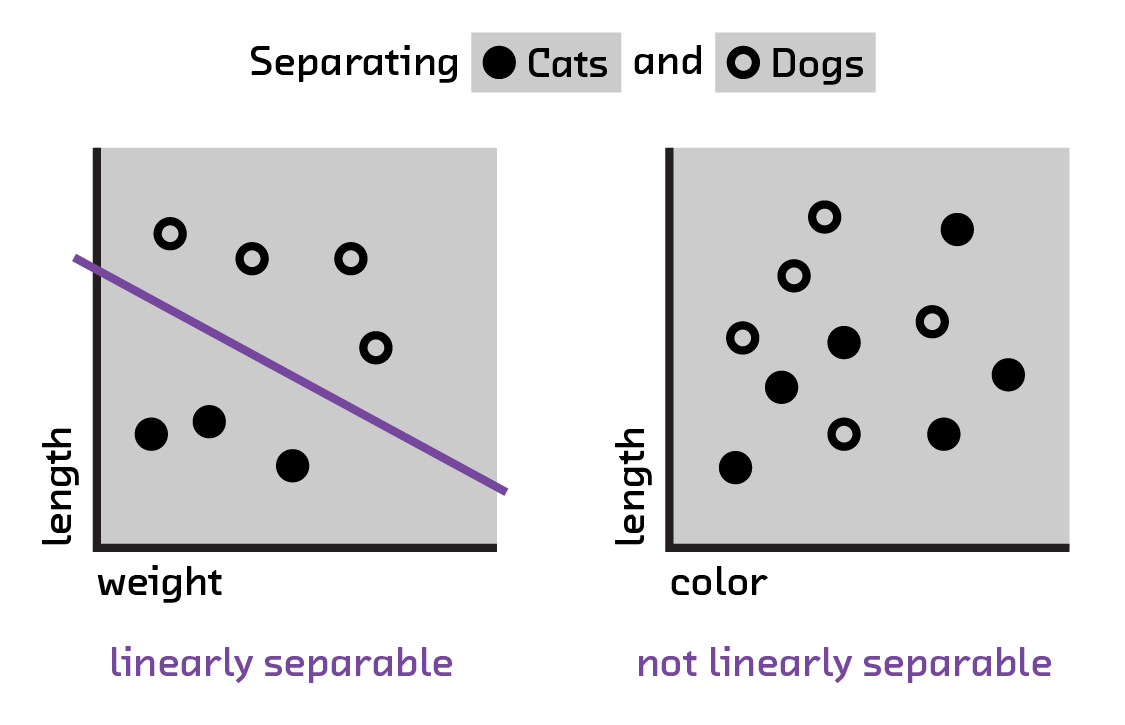

The weights in a perceptron describe a linear function that separates the input parameter space into two sections describing the two possible classifications of the system. As a result, only linearly separable problems can be solved. What we mean by separability is that our parameter space (all features encoded in our input vector) has the capability of having a line drawn through it, which at those values creates a boundary between classes of things. This quickly limits the effectiveness of perceptrons when applied to more complicated classification problems.

=== Feed-Forward Networks: Perceptrons for Real Data ===

As a result of the single layer peceptron being limited to linearly separable problems, researchers soon realized that it could only solve toy problems in its original formulation. What followed were a series of innovations that transformed the perceptron into a model that is still to this day the bread and butter of neural networks: the feed-forward network. This involved the modification of most of the original components, while retaining the underlying theory of Hebbian learning that originally motivated the perceptron’s design.

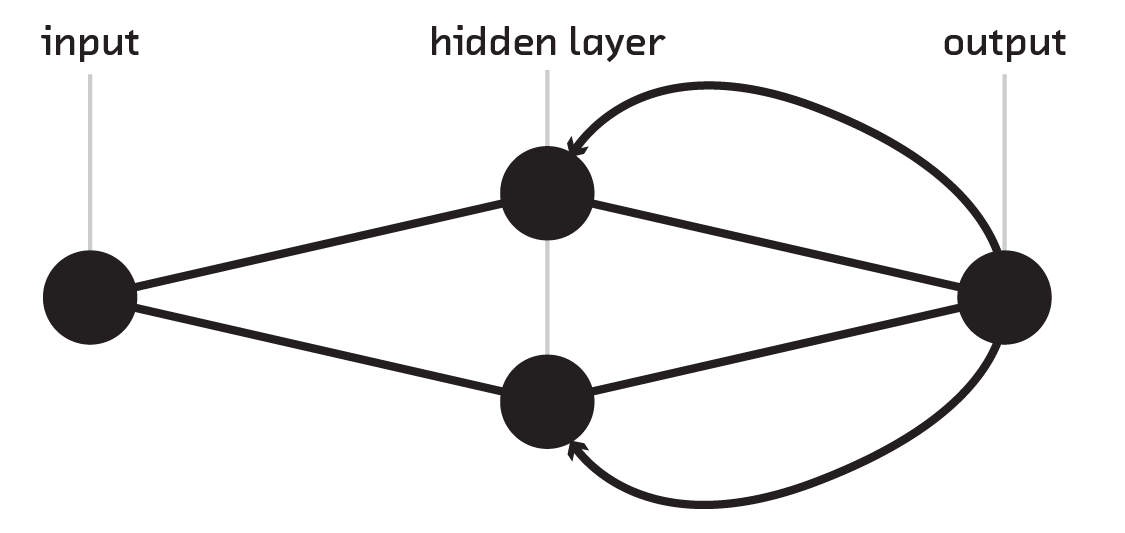

The feed-forward neural network is the simplest – but most widely used – type of neural network. The model assumes a set of neurons with an arbitrary threshold value and connections to the next set of neurons. The first set of neurons perform a weighted summation of their input; then, that signal is fed forward to the next layer. As connections are unidirectional toward the next layer, the resulting network has no potential cycles, which simplifies the training procedure.

To understand how these networks work, we’ll first need to amend a few of our basic concepts from the perceptron.

==== Nonlinear Activation and Multiple Layers ====

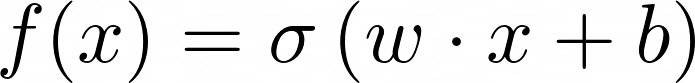

Nonlinear activation functions change several things from the perceptron

model. First, the output of a neuron is no longer only 0 or 1, but any value

from 0 to 1. This is achieved by replacing the piece-wise function that defines

f(x) in the perceptron model with:

where σ is the chosen nonlinear function.[1]

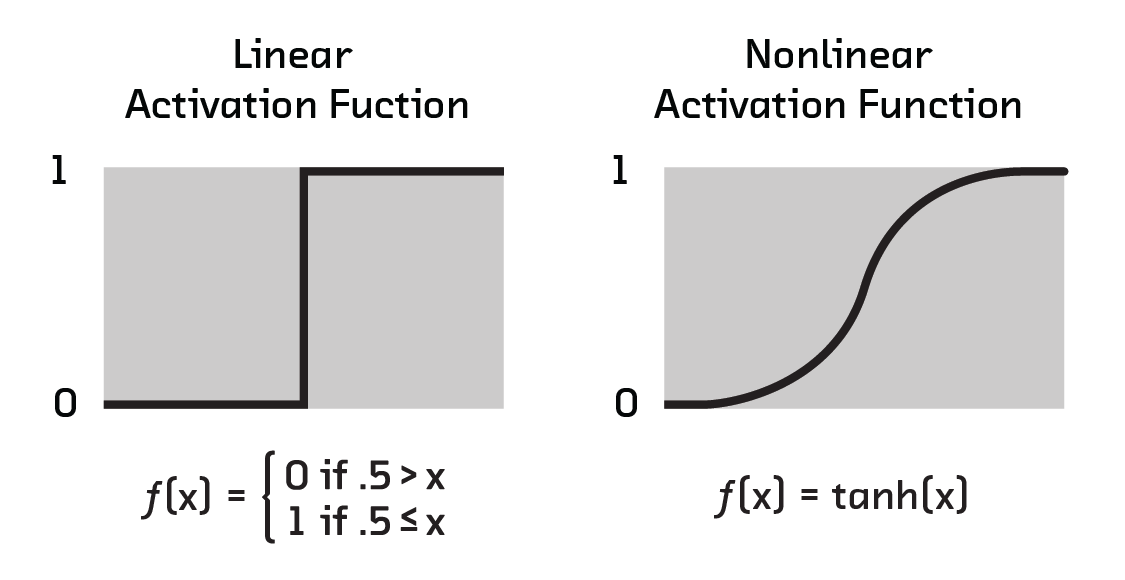

Next, stacking perceptrons allows for hidden layers, at the computational cost of

many more weights. Here, every node in the new layer gets its value by

evaluating f(x) with its own set of weights. As a result, the connection

between a layer of N nodes and M nodes requires M weight vectors of size

N, which can be represented as a matrix of size N x M.

Having multiple layers opened up the possibility of multiclass classification – i.e., classifying more than two items (the limit of the perceptron). The final layer can contain multiple nodes, one for each class we want to classify. The first node, for example, can represent “dog,” the second “cat,” the third “bird,” etc.; the values these nodes take represent the confidence in the classification.

Of course, this introduces the complexity of selecting the correct classification. Should we simply accept the class with the highest confidence, a maximum likelihood approach? Or should we adopt another method (Bayes), where the set of probabilities across all possible classes informs a more sophisticated choice. Maximum likelihood is generally accepted in practice however, in our prototypes we explore alternate methods to make low-confidence classifications useful.

Crucial to these advancements is that they allow classifications of datasets that are not linearly separable. One way to think about this is that the hidden layers perform transformations on the space to form linearly separable results. But the reality is slightly more complicated. In fact, with the addition of nonlinearity, a feed-forward neural network can act as a universal approximator. That is to say, nonlinearity enables a neural network to model any function, with the accuracy proportional to the number of neurons. Adding multiple layers makes it easier to attain high-accuracy models and reduces the total number of required nodes.

Now it begins to come together how adding more layers actually escalates our power to model, classify, and predict. However, why is it that neural networks are so much better then traditional regression and hierarchical modeling? We’ve mentioned before that we train our models; now, let’s take a look at how this is done.

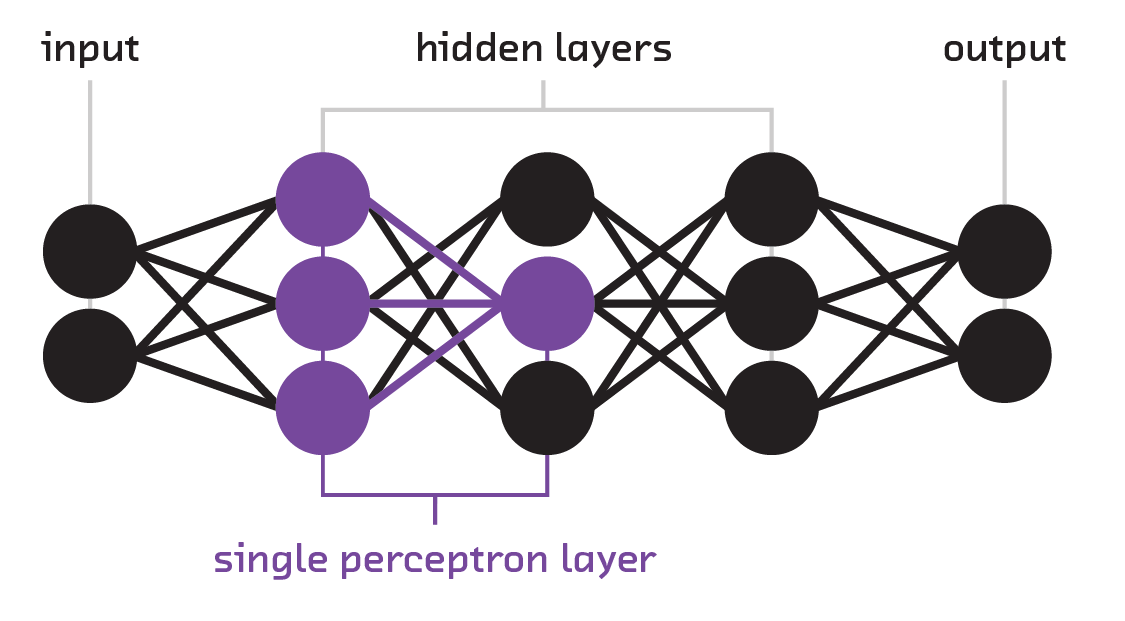

Backpropagation

While these models seem fantastic, it was generally possible to train comparable models on small datasets using classic regression techniques. The real breakthrough for neural networks was in the learning or training procedure: backpropagation. This piece of the puzzle is the reason why neural networks outmuscled previous methods.

Backpropagation is an optimization technique for models running on labeled data (also known as supervised learning). While this algorithm had been known for quite a long time, it was only first applied to neural networks in 1986. In this technique, data is fed through a randomly initialized network to identify where the network gets things wrong. This error is then “backpropagated” through the network, making subtle changes to gently nudge the weights toward better values. The goal of this training is to craft our weights and biases to transform our input vector, layer by layer, into a separable space (not necessarily linearly separable) where it can be classified. This is done with successive use of the chain rule, which can be thought of as iteratively seeing how much a given weight contributed to a particular result and using the calculated error to correct the problem.

Think of a musician who is playing electric guitar on a new amp for the first time. Her goal will be to make the tonality clear and the distortion appropriate for the song or style, even though the amp’s settings are initially random. To do this, she’ll play a chord with her sound quality goal in mind and then start fiddling with each knob on the amp: gain, mid, bass, etc. By seeing how each knob relates to the sound and repeatedly playing the chord, adjusting, and deciding how much closer she has gotten to her goal, she will do a kind of training. Listening to the chord is like evaluating the objective function, and tuning the knobs is like minimizing the cost function.

While the exact description of the algorithm is outside the scope of this report,[2] there are several

considerations to keep in mind. First, the error that is propagated through the

network is based on the cost function (also known as the loss function).

The cost function defines “how wrong” the neural network was in a given

prediction. For example, if a network was supposed to predict “cat” for a given

image but instead says it thinks 60% it was a cat and 40% that it was a dog, the

loss function would determine how much to penalize the network for the imperfect

output. This is then used to teach the network to perform better in the future.

There are many possible choices for a loss function, and each one penalizes the

network differently based on how incorrect it was versus the correct

output.footnote:[Common loss functions are categorical cross entropy, mean

squared error, mean absolute error, and hinged.] As we’ll see

in <

Second, backpropagation is an iterative algorithm and has a parameter, the

learning rate, that determines how slowly it should change the weights. It is

always advised to start with a small learning rate (generally .001 is used:

if a change of 5.0 would precisely fix the error in the network, a

change of .005 is applied). A small learning rate is crucial to avoid

overfitting the model to the training data because it limits the memorization of

particular inputs. Without this, our networks would learn only features specific

to the training set and would not learn generalization. By limiting the amount the

network can learn from any particular piece of data, we increase the ability of

the network to generalize. This is such an important piece of neural networks

that we even go as far as modifying the cost function and truncating the network

to help with this generalization.

Furthermore, there is no clear time when iterations are done, which can cause

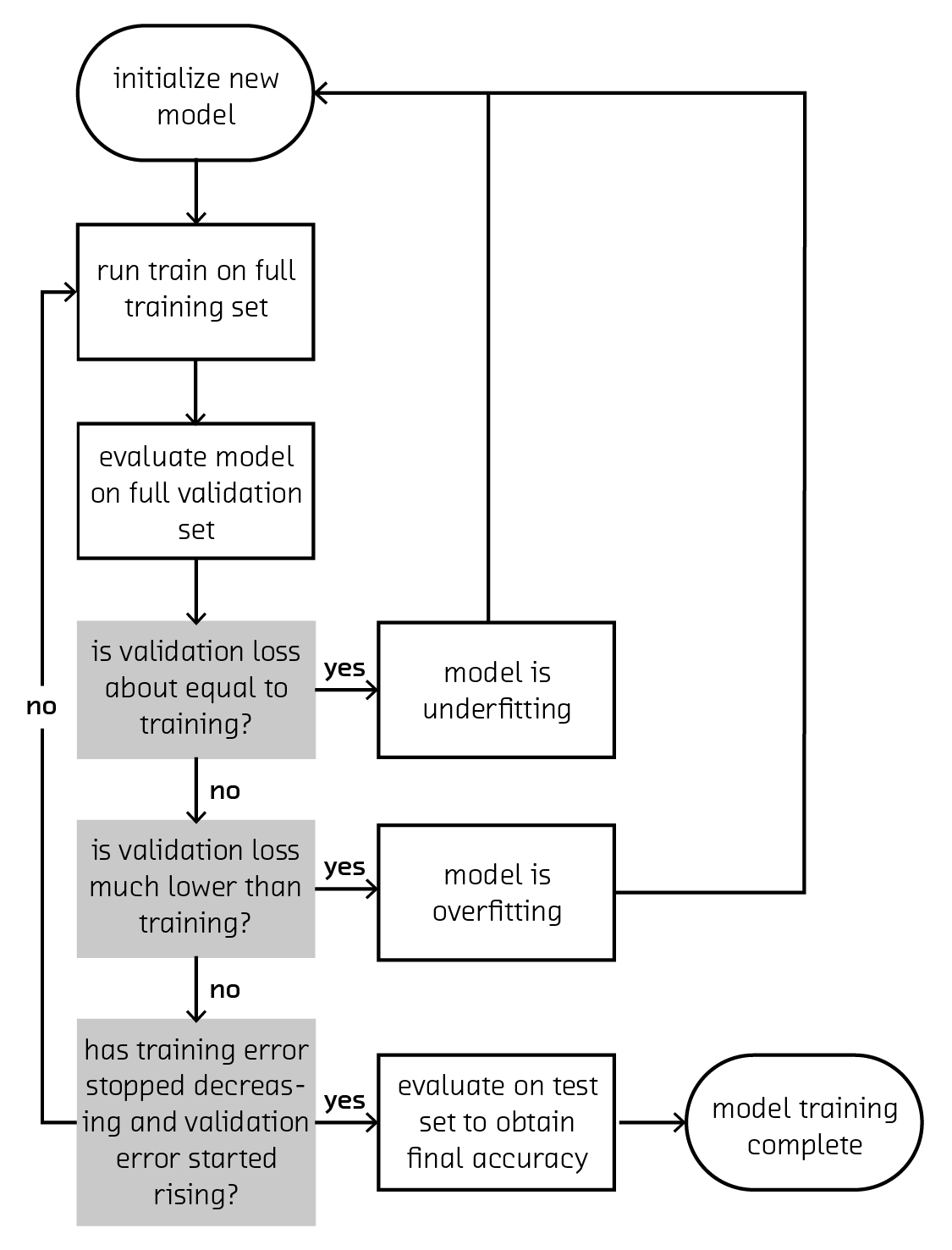

many problems. To address this, we diagnose the network using cross-validation.

Specifically, the dataset should be split into two parts, the training set and

the validation set (generally the training set is 80% and the validation set is 20%

of the data). We use the validation set to calculate an error (also called the

loss), which is compared to the error on the training set. Comparing these two

values gives a good idea of how well the model will work on data in the wild,

since it never learned directly from this validation data. Furthermore, we can

use the amount of error in the training versus the validation sets to diagnose

whether training is complete, whether we need more data, or whether the model is

too complex or too simple (see <

That backpropagation is an iterative algorithm that starts with essentially random weights can also lead to suboptimal network results. We may have terrible luck and initialize our network close to a local minimum (i.e., backpropagation may yield a solution that is better than our initial guess of weights, but nowhere close to a globally “best” solution). To correct this, most researchers train many networks with the same hyperparameters but with different randomly initialized weights, selecting the model with the best result.

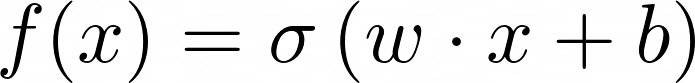

Regularization

Even with our current tools for building neural networks, we still face the basic threat that all machine learning entails: overfitting. That is, even with a complicated architecture, we risk making a neural network that only understands our current dataset. To make sure we don’t teach our pony one trick, we need to create a robust regularization scheme.

Regularization is a set of methods to ensure the model better generalizes from the dataset used to train it. This may not seem as glamorous as finding new neural architectures, but it is just as important since it allows us to train simpler models to learn more complicated classifications without overfitting. Regularization can be applied to a wide range of models, allowing them to perform better and be more robust.[3]

The first form of regularization that was used is L1 and L2 regularization,

collectively known as weight decay (see <[0.4, 0.6, 0.2], we could easily affect

the output vector from that layer using backpropagation; however, the

weights [0.4, 256.0, 0.2] would be almost unaffected by a similarly

backpropagated error. Small weights create a simpler, more powerful model.

A more recent, and very popular, form of regularization called dropout is widely

used. In this method, a random subset of neurons “drop out” of a forward and

backward pass during training. That is to say, with a dropout parameter of

0.2, during training only 80% of neurons are ever used for forward and

backward propagations (and the weight values are scaled accordingly to account

for the reduced number of neurons). As every neuron learns aspects of the

features necessary to do the classification, this means the decision-making

process is spread more evenly across nodes. Think of this as noise being added

to the input, making overfitting rarer since the network never sees the same

exact input twice.

Putting It All Together

In the feed-forward model, we transform the input vector by sending it through neurons that use weights and biases to compute intermediate values. Then we pass these values through a nonlinear activation function to see if the information moves forward. We use multiple layers to allow for more general feature extraction, since each layer gives our model complexity. Finally, we use the result from our output layer to calculate how wrong we were with a cost function. Backpropagation tells us how to adjust each neuron to improve our result, and we use regularization and dropout to generalize our results and prevent overfitting.

This may seem complex, but this background is sufficient to understand, evaluate, and engineer Deep Learning systems.

The feed-forward net is to neural networks what the margarita is to pizza: the foundation for further exploration. Most systems you’ll have encountered in the wild prior to recent innovations were feed-forward neural networks: systems for things like character recognition, stock market prediction, and fingerprint recognition. Having covered the basics, we can now start to take you from simple systems to complex, emergent ones.

Convolutional Neural Networks: Feed-Forward Nets for Images

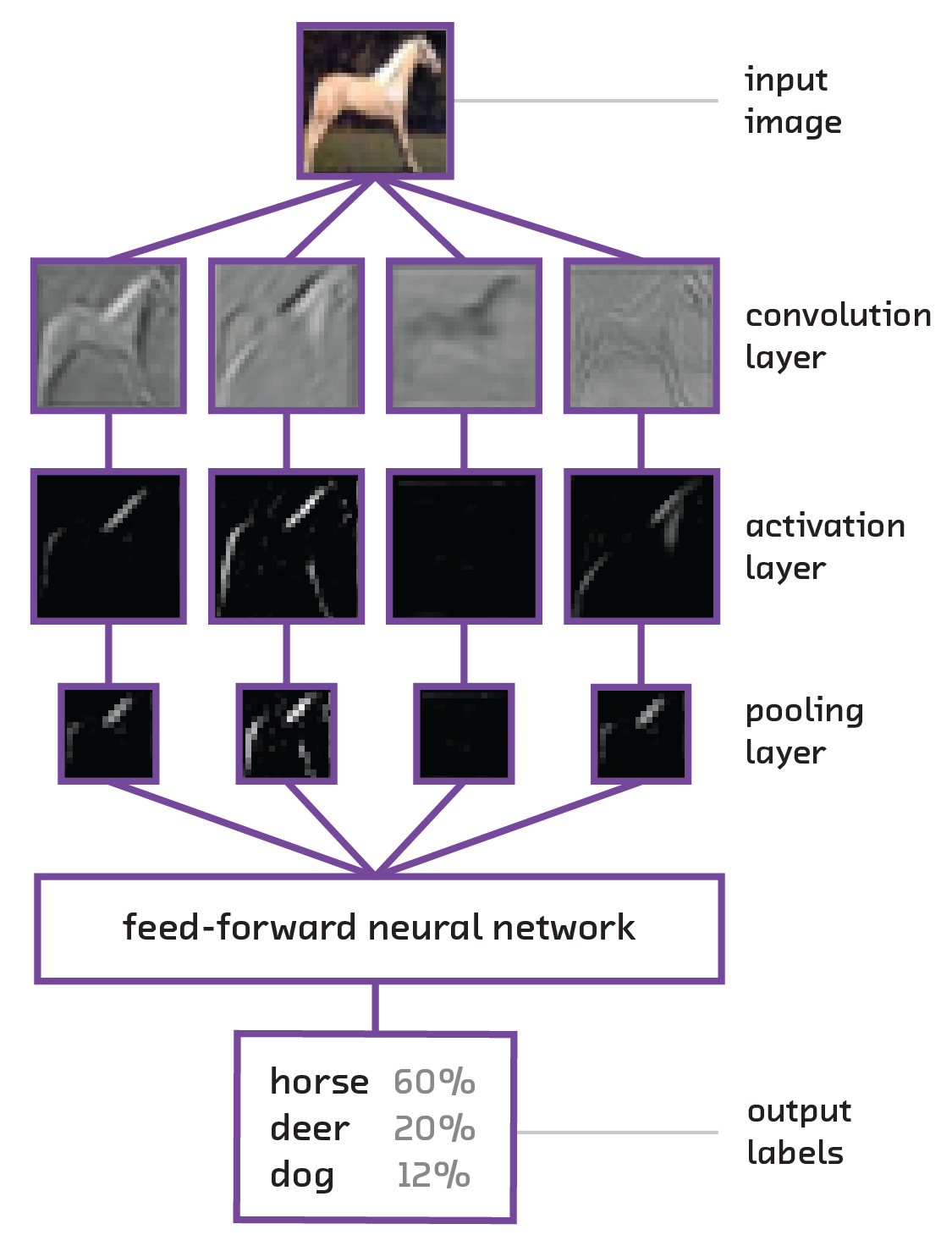

If Deep Learning ended with feed-forward neural networks, we would have trouble classifying images robustly. So far, our inputs have all been vectors; but images are spatial, intrinsically 2D structures (3D if we include color). What is needed is a neural network that can maintain this spatial structure and still be trained with backpropagation. Luckily, this is exactly how convolutional neural networks work [4].

These networks are quite new. They were first explored in 1980, and

gained wide spread adoption in 1998 in the form of the LeNet (pioneered by Yann LeCun) with their

ability to do hand-written digit recognition. However, in 2003 they were

generalized and simplified

into a form which allowed them to solve much more complex problems.

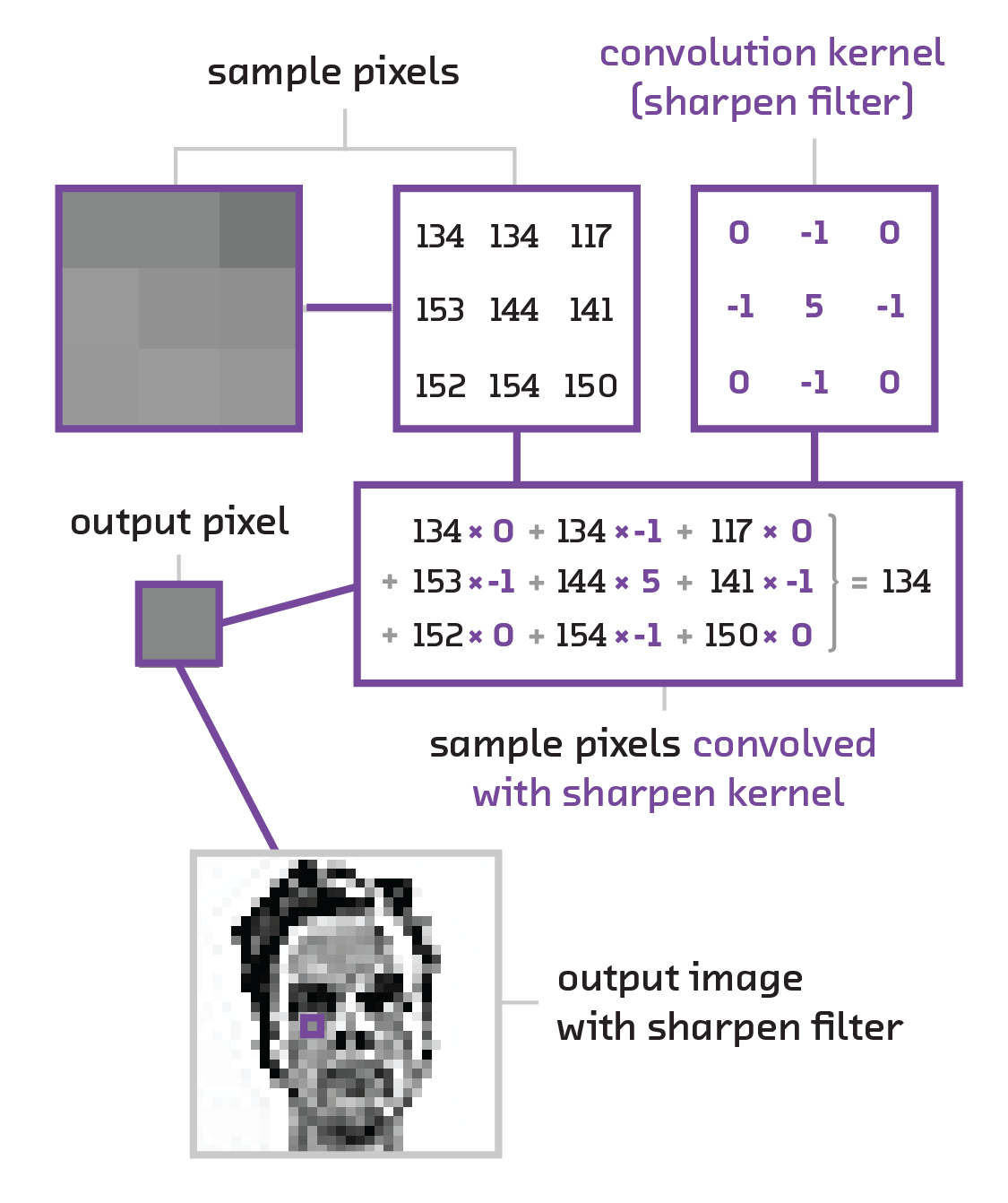

As the name states, instead of operating on the matrix multiplication between the input and a set of weights, a convolutional neural network works on a convolution [5] between the input and a kernel (see below). The kernel is simply a small matrix (generally between 3 x 3 and 8 x 8) that extracts certain spatial features from the image. This sort of technique is used all the time in classical computer image processing. For example, the Sobel operator that is often used for edge detection in images works by convolving a specific set of 3 x 3 matrices with the image. With a convolutional net, we aim to learn the values for these kernels instead of for the full sets of weights.

The full structure of a convolutional neural network is a stacking of several convolutional layers and then several layers of a classic feed-forward neural network. The convolutional layers can be thought of as prepping the data so that the feed-forward layers can take advantage of the spatial structure of the input image. This structure highlights the flexibility of neural networks in general – we can choose to have the convolutional layers feed into a feed-forward neural network or any other type of neural network, depending on what the problem demands. (In 8 The Future we talk about alternate setups used to solve different types of problems, such as captioning images or training a computer to play video games.)

When defining a layer of a convolutional neural network, we specify the number of kernels, how big each kernel is, and the “stride” (step size, or number of spaces moved between each kernel evaluation). This layer will output a new “image” that has a different dimensionality from the input, with spatial features extracted. For example, if our input image was 227 x 227 x 3 (i.e., 227 x 227 pixels over 3 colors) and we used 96 kernels of size 11 x 11 with a stride of 4, the output of this layer would have the dimensions 55 x 55 x 96. These, in fact, are the exact layer parameters for the ImageNet model. In this formulation, each of the 55 x 55 layers is considered a depth slice.

It is important to note that while there are incredibly high numbers of inputs and outputs, there are only 96 x 11 x 11 x 3 weights across the entire layer. With the addition of the 96 biases, this is a total of 34,944 parameters – substantially fewer than the 44,892,219,387 parameters we would have had in a normal feed-forward neural network! This is why convolutional neural networks ushered in neural image processing. It is amazing how much processing can be done with a convolutional neural network given the relatively small number of parameters. The convolutional example above uses the same number of parameters as two feed-forward layers of 186 neurons each, which is quite small for any problem of real interest!

A method known as max pooling, which combines

the values of pixels close to each other, further reduces the number of

parameters in convolutional networks. This can happen on the input or for any

depth slice. Max pooling defines a region, typically 2 x 2- or 3 x 3-pixel

blocks, and only takes the maximum value in any block of that size. This is

similar to downsampling or resizing an image: it both reduces the dimensionality

of the data and adds a form of translational invariance that safeguards the

neural network when the scene is shifted in one direction or another (i.e., if

most pictures of cats have the cat in the center of the image, but we still want

it to perform well if the cat is on the side of the image). We can see the

result of these dimensional reductions in <

In practice, convolutional networks are used as a feature extractor for classic feed-forward networks. A convolutional neural network takes an image as input and processes it through many layers of convolutions. Once the image has been treated through enough layers, the output of the final convolutional layer is reshaped into a vector and fed into what is called a “fully connected” layer. This may seem like exactly what we were avoiding – once we reshape the data into a vector, we lose the spatial relationships we were trying so hard to maintain. The intuition, however, is that after the image has been passed through multiple convolution steps, the neurons will have been encoded with all the relevant spatial features. For example, if the image had a diagonal edge, there would be some neurons will have encoded that pattern, and therefore rendering the actual spatial data at that point is redundant.

Once in the fully connected layer, we can simply classify as before. In fact, the fully connected region of the network is where we actually start making the associations between the spatial features seen in the image and the actual classifications that we require the network to make. One might think of the convolutional steps as simply learning to look at the picture the right way (i.e., looking for specific color contrasts, edges, shapes, etc.), while the fully connected layers correlate the appearance of particular features with classification classes. In fact, the spatial features seen by the convolutional layers are often robust enough that they can be kept static when training a network on multiple tasks, while only the weights for the fully connected layers are changed from problem to problem in a method called fine-tuning.

Fine-tuning has raised the potential of neural networks as common components within a service. It allows us to use them in transfer tasks: when we take part of a pretrained model and port it over to a different task by fine-tuning the new layers. For convolutional networks, this means we can continue to improve models that encode spatial features while utilizing them in diverse classification problems. Task transfer allows us to iterate on specific pieces of our architecture while modularly building systems for new problems. In many cases, this saves time and computational cost because parts of a robust neural network can be connected into new layers trained around a particular problem.

What Is Deep?

Having taken the long walk with us through the building blocks of this technology, you may still be wondering, “What exactly is this Deep Learning thing?” Since you’re likely to hear this term trumpeted by many new companies and the media in the near future, we want to make sure it’s given some context – or should we say, depth.

As previously shown, layers in a neural network can be stacked as desired, at the cost of computation and the requirement for more data. However, with each new layer, the neural network is able to create more robust internal representations of the data. This can allow a deep neural network to tease out very subtle features from the data to accurately classify inputs that would cause other models to fail.

It is important to realize, however, that when we speak of “deep” learning, we are not simply referring to the number of layers. While there is no concrete definition of what “deep” means in this context, it is generally accepted that the number of causal connections each neuron has is a more accurate representation of the depth. That is to say, if a particular neuron’s output can affect a large number of other neurons through many possible paths, that network is considered deep. [6] This focus on the number of possible causal connections allows us to think of powerful but small architectures as deep. For example, simple two-layer recurrent neural networks may not have many layers, but the robustness of the neural connections creates many causal links, resulting in a network that can be seen as very deep.

What Neural Networks Are and Are Not

The very term “neural network” invokes cybernetic imagery. We easily fall into the old sci-fi fantasy of thinking, conscious machinery. While seductive, this account of what a neural network is doing is practically entirely false. Neural networks are structurally and functionally dissimilar from real brains, even if the brain’s neural connectivity inspired the computational infrastructure.

In this section, we’ll develop intuition around what tasks neural networks succeed at and debunk the mythology around these “artificial brains.” With this knowledge, we can evaluate why they aren’t quite brains, but still do an impressive job of modeling highly complex functions that can classify large and diverse data – and, importantly, learn how to identify when claims around neural networks are being overstated.

Interpreting a Neural Network

In Section 2, we covered the basic architecture and computational techniques used to design and train neural networks. How computer scientists often interpret these systems is that we are creating one glorified feature extractor. That is, we comprehend our data as having discrete features that, once teased out, tell us how that data should be treated given some classification or prediction goal. This is the assumption of separability that essentially means we believe our data’s features can be identified, separated, and bounded so as to make robust class distinctions. Neural networks are tools for extracting out these features and, in turn, making classification decisions.

You may remember that with convolutional neural networks (which are used for object recognition), we use kernels to encode features from smaller sections of our images (e.g., detecting an edge). With these features encoded, we can then use further layers to separate our feature space. And this is the essence of even the most complicated, multilayered neural networks: layer by layer we are transforming our data, first to encode the data’s features and then to filter them based on how our model has “learned” to categorize those features. Because these transformations are nonlinear and we do not actually know what features each layer is encoding, any further interpretation requires a very fine, technical inspection of the model in action.

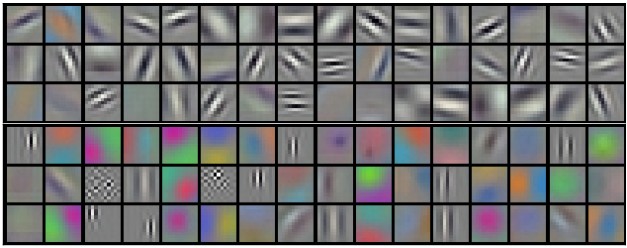

Due to this complexity, many researchers have worked on visual techniques that give them insight into what is happening at each layer. [7] There are many people who specialize in gaining practical results (e.g., tuning hyperparameters, adding/subtracting layers), but this high-level description is about as close as we get to interpreting what these systems are actually doing.

Some of you may have seen the images generated by Google’s Deep Dream software, which incepts images with psychedelic-looking dogs, buildings, etc. [8] This technique was designed to gain insight into what the neural network is encoding at a particular layer. The tactic is to take an already trained network but only evaluate it up to the layer we want to inspect. Then, taking a separate image that we want to “incept” into our neural network, we run our inception image up to the layer we want to inspect and get its values. Then we run our backpropagation algorithm, except instead of minimizing our usual cost function, we minimize against the values found for the incepted image. Finally, we backpropagate all the way into the image, treating it as a modifiable layer rather than fixed input data, adjusting its pixel values to get a visual representation of what it’s trying to encode.

While this method may seem like a toy and nothing else, it provided us with the

first insight into the complexity of the features that a convolutional neural

network was using to do its classification. Before, the only way to see what

features were being extracted was to look at the activation maps (see

<

Google’s method is a taste of how one may be creative in figuring out what a neural network is encoding. It is only good for visual images, and tells us a limited amount, but it allows us a visual – and sometimes creepy – insight into what the weights and biases of a particular layer mean.

Limitations

For someone who makes plans and decisions based on emerging technologies, it is crucial to have a discerning eye for what is achievable and what is far-fetched. Having now seen the basic concepts behind neural networks and a functional interpretation, you may already have some inkling as to why these systems are not “strong AI.” [9] While neural networks are powerful, the brain-like vocabulary is misleading. Obviously, they are materially different, but these systems are not “brains” from a functional standpoint either.

To begin with, brains have plastic connections – neuron A may not always be connected to neuron B at all times – whereas in neural networks these connections are rigidly defined. Further, in brains, everything is a function: even activation functions are functions since neuronal activations are primed by many conditions determined by the local chemical state. Neural inhibitors and transmitters modify individual synapses, changing both the firing potential and the signals transmitted. Rather than accepting input vectors of a specific shape, brains adapt to multimodal inputs that are combined internally.

All of these functional differences must be taken seriously before we go too far in comparing neural networks to human intelligence. These descriptions tell us what’s different, yet only go so far in characterizing what they amount to in the way of limitations. Many of the tasks that we are eager to see AI accomplish involve cognitive criteria for success. Understanding this distinction between cognitive and computational involves clarifying the difference between learning and thinking, as well as processing and integrating information.

As was elaborated in Section 2, neural networks have a capacity for learning via parameter adjustment. Recall that this learning is the result of minimizing a single objective function defining our task. The existence of the objective function makes learning in neural networks quite different from in brains. Why we will distinguish the role of thinking to understand this contrast is because the process of thinking involves switching and integrating contexts – something neural networks are not yet able to do. Here, learning is the feedback system that improves practical results on a task, whereas thinking is the process that reflects on the task, our goal orientation toward it, the varying approaches we may take, etc. In neural network terms, this would imply objective functions constantly morphing (task redefinition) and the shape of input vectors changing (context adjustment) as needed. Consider being taught a word, but then asking your teacher for a visual demonstration to go along with the syntactic definition – both the visual and auditory contexts aid in your learning. Neural networks do not have this flexibility.

An implication of how neural networks are currently designed is that they are processing systems rather than integrating systems. That is, they do not know or care about outside information that may be pertinent to their task, or about whether their goal is appropriate. They merely act on particular input shapes, achieving particular output shapes using rigidly defined computations. An integrating system requires a higher-order functioning that cannot be reduced to any particular processing task, which itself can include/exclude specific information and adjust the task. It is integration that is needed for cognitive tasks and that must be worked on before AI encroaches on the kind of intelligence we dream about in our sci-fi fantasies – strong AI.

With that said, we still find neural networks extremely useful because they allow us to take a diversity of inputs and model arbitrarily complex functions. Given that developing functions for mapping intricate inputs into simple outputs is an extremely difficult task, neural networks offer technologists an incredible service by being able to approximate any continuous function capable of being written down. We call this the universality of neural networks, and it will be touched on in the next section.

Common Misconceptions

Despite the limitations characterized above, neural networks continue to be in the press with many fantastical articles written about them. It seems that every week a new neural network is devised that reportedly gets closer and closer to being able to read your thoughts or interact with you in a deeply personal way. However, as you now know, these claims are far from the truth. While neural networks are indeed advancing, we are at a point where either vast improvements must be made to the algorithms or we must devise exponentially bigger computers. [10]

This is because current neural networks are quite limited in the generality they can obtain. We are only now reaching levels where image classification can be done at an accuracy better than that achievable by humans; however, that is only for a very focused set of classification labels on a very curated dataset (known as the ImageNet dataset, which is used annually in image recognition competitions).

It has been shown, however, that neural networks do very well on transfer tasks, where the model has been trained on one problem and is then applied to a different problem with minimal retraining. However, just as before, these tasks must have quantifiable objectives, making them very limited in scope – a limitation currently affected by the sizes of both the models and the datasets we have available to train on.

Furthermore, an aspect of neural networks that is often left undiscussed in public is how the exact formulation of the data determines our effectiveness. We may feel tempted to believe that if we had a large enough neural network and all of Facebook’s data, we could predict users’ preferences given certain online actions. This misconception stems from the nature of training on cost functions, since neural networks currently only show their real utility for supervised learning problems where the correct results are known for the given training set. That is, to train the neural network we must have a labeled dataset that teaches the model correct relationships between input and desired output.

This problem with datasets can also lead to many subtleties where a dataset does indeed exist, but is not robust enough to fully describe the problem. For example, if we had images of news anchors as they were talking about different stories, and each image was labeled with the topic of the story the person was discussing, would we be able to later determine the topic of a story given an image of the news anchor? This might be possible in certain cases – for example, if the topic was weather we could probably use the existence of a weather map in the background as a giveaway. However, for many other topics there are no cues that could be used to determine what is being talked about, since we are simply using the wrong data – we are using images of the anchor when what we really want is audio or a transcription of what is being discussed! This type of problem is quite prevalent, where it seems our dataset has the right association, but it is missing the correct context in order to find a reasonable solution to the problem.

In the end, neural networks are not magical devices that can solve any problem they are given. Instead, they are a way of training incredibly large models to solve very high dimensional problems. So as you consider whether or not they are right given your problem, or whether to believe someone else’s claim to be solving a problem using a neural network, here’s a list of questions to ask yourself:

- Can you quantitatively describe the features of your problem space?

- Does the problem always require the same information to solve?

- If you were to give this task to a group of humans, would you expect consensus?

- Is the problem’s solution context dependent? If so, does the data cover that context?

- Can you verify the correctness of a given output?

- Does the neural network claim to know you better than you know yourself?

Are Neural Networks Right for You?

There are many things to consider when deciding whether to use neural networks in your system. While very powerful, they can also be very resource intensive to implement and to deploy. As a result, it is important to make sure that other options have already been exhausted. Trying simpler methods on a given problem will at the very least serve as a good benchmark when validating the effectiveness of the neural model. However, for images, non-neural methods are quite limited unless auxiliary data is available (for example, quality-controlled user-generated tags with standard clustering and classification algorithms could be a good first-pass solution).

Picking a Good Model

If a neural model seems like the only solution, one of the most important considerations when starting is whether a model (particularly a trained model) that solves the problem already exists. These pretrained models have the advantage of being ready to use immediately for testing and already having a predefined accuracy on a given dataset, which may be good enough to solve the problem at hand. Getting to those accuracies often involves tuning many parameters of the model, called hyperparameters; this can be quite tedious and time-consuming, and requires expert-level understanding of how the model works in order to do correctly. Even with all other hurdles taken care of, simply figuring out a good set of hyperparameter values for the model can determine whether the resulting model will be usable or not.

There are many pretrained models out there to use. Caffe, for example, provides

a model zoo where people can share models in a standardized way so they can be

easily used. One that is of particular interest is the googlenet model, which

comes with an unrestricted license and does ImageNet classification, out of the

box, with 68.7% accuracy for predicting the correct tag and 89% accuracy for

having the correct tag in the top five results. While some of the models in the

zoo are released under a commercial-friendly license, many are distributed using

a non-commercial license (normally as a result of restrictions on the underlying

dataset). However, these pretrained models can at least serve as a good basis

for testing to see if the particular approach is valid for your problem before

going ahead and training the same model on your own custom data.

Notable Models in the Model Zoo

- Places-CNN: Trained on images of various locations and of various objects

- FCN-Xs: Segments images to find the locations of objects in images

- Salient Object Subitizing: Finds the number of objects in an image

- Binary Hash Codes: Generates semantic image hash codes for fast “similar image” retrieval

- Age/Gender: Predicts the age and gender of a person through an image

- Car Identification: Identifies the model of a car

Fine Tuning / Transfer Learning

Once a pretrained model is found, it can either be used outright or run through a process called fine-tuning [11]. In fine-tuning, a pretrained model is used to initialize the values for a new model that is trained on new data. This process shows how robust neural networks can be – a model trained for one purpose can very easily be converted to solving another problem. For example, a model used to classify images can be fine-tuned in order to rank Flickr images based on their style [12]. A benefit of this is that the pretrained model already has some abilities in recognizing images, or whatever task it was intended for, which means the fine-tuning training is more focused and can be done with much less data. For applications where a pretrained model that solves the given problem cannot be found, and an adequately sized dataset is not available, it may be necessary to find a “good enough” pretrained model and use fine-tuning to repurpose it for the given problem. As mentioned in the description of <<convnet,convolutional neural networks>>, often convolutional layers are reused since their ability to extract objects from a scene are not necessarily greatly affected when changing the downstream classification task at hand. [13]

Datasets and Training

One of the biggest difficulties with training your own neural network is finding a large enough dataset that fits the problem space. While deep neural networks can be trained to perform a wide variety of tasks, as more layers are added (and thus the total number of parameters of the model increases), the amount of data necessary for training also increases. As a result, when deciding whether it is possible to train your own neural network to solve a problem, you must consider two main questions: “Do I have enough data?” and “Is my data clean and robust enough?”

Unfortunately, there are no easy ways to know a priori whether your dataset is large enough for the given problem. Each problem introduces its own subtleties that the neural network must learn to figure out – the subtler the differences between the example data, the more examples are necessary before the model can figure them out.

A good rule of thumb is to compare the results of your cost function between the training and validation sets, also known as training loss and validation loss. Commonly we aim at having a training loss that is a bit higher than the validation loss when performing backpropagation. If the training loss is about the same as the validation loss, then your model is underfitting, which means you should increase the complexity of the model, adding layers or connections. If the training loss is much lower than the validation loss, then your model may be overfitting. Solutions to this include decreasing the model’s complexity or increasing the dataset size (synthetically or otherwise).

Furthermore, when training a convolutional neural network, it is useful to look at the actual kernels to gauge the performance of the network while it’s being trained. We expect the kernels to be smooth and not look noisy. The smoothness of the resulting kernels is a good measure of how well the network has converged on a set of features. Noisy kernels could result from noisy data, insufficient data, an overly complex model, or insufficient training time.

One common way to synthetically increase the dataset size in an image-based task is through multisampling, where each image is cropped in multiple ways and flipped horizontally and vertically. Sometimes, noise is even introduced into the input every time a piece of data is being shown to the network. This method is recommended in every application, not only because it increases the dataset size, but also because it makes the resulting network more robust for rotated and zoomed-in images. Alternatively, the dropout method discussed in Section 2 (a type of regularization) can be used. It is generally advisable to always use the dropout method with a small dropout factor to prevent overfitting whenever there is limited data available.

However, if tweaking the model complexity, dataset size, or regularization parameters doesn’t fix the validation and training losses, then your dataset may not be robust enough. This can happen if there are many examples of one category but not many of another (say, 1,000 images of cats but only 5 of scissors), or if there is a general asymmetry in the data that allows the neural network to learn auxiliary features. A common example of this in image training is the picture’s exposure and saturation. If all pictures of cats are done using professional photography equipment and pictures of scissors are taken on phones, the network will simply learn to classify high-quality images as cats. This problem shows itself quite often in the use of pretrained networks on social media data – many pretrained networks use stock photography as their training set since it is highly available, however there are vast differences between the quality and subject of stock pictures and pictures found on social media websites. One solution is normalizing images, a procedure where the mean pixel value of the entire dataset is subtracted from each image in order to deal with saturation and color issues. However, in cases where the dataset the model is being applied to differs drastically from the training set, it may be necessary to fine-tune the model, or start from scratch.

Testing What You’ve Made

Finally, it is important to understand the model you have created or intend to use. Even though neural networks are non-interpretable models (meaning we cannot gain too much insight into the internals of how the model is making the decisions it is), we can at least understand the domain that the model is applicable for by looking at the training set and having a robust enough test set.

For example, if we create a network that can estimate the amount of damage done to a region by a natural disaster using satellite imagery, what will the model say for regions that were completely unaffected? Is it biased to specific architectural or geographical features because of a bias in the dataset? Or maybe those biases simply emerged because the model was not robust enough to extract deeper features. Having a holdout sample of the dataset that is only used once the model is fully trained can be invaluable in understanding these sorts of behaviors; however, a careful analysis of the dataset itself is important.

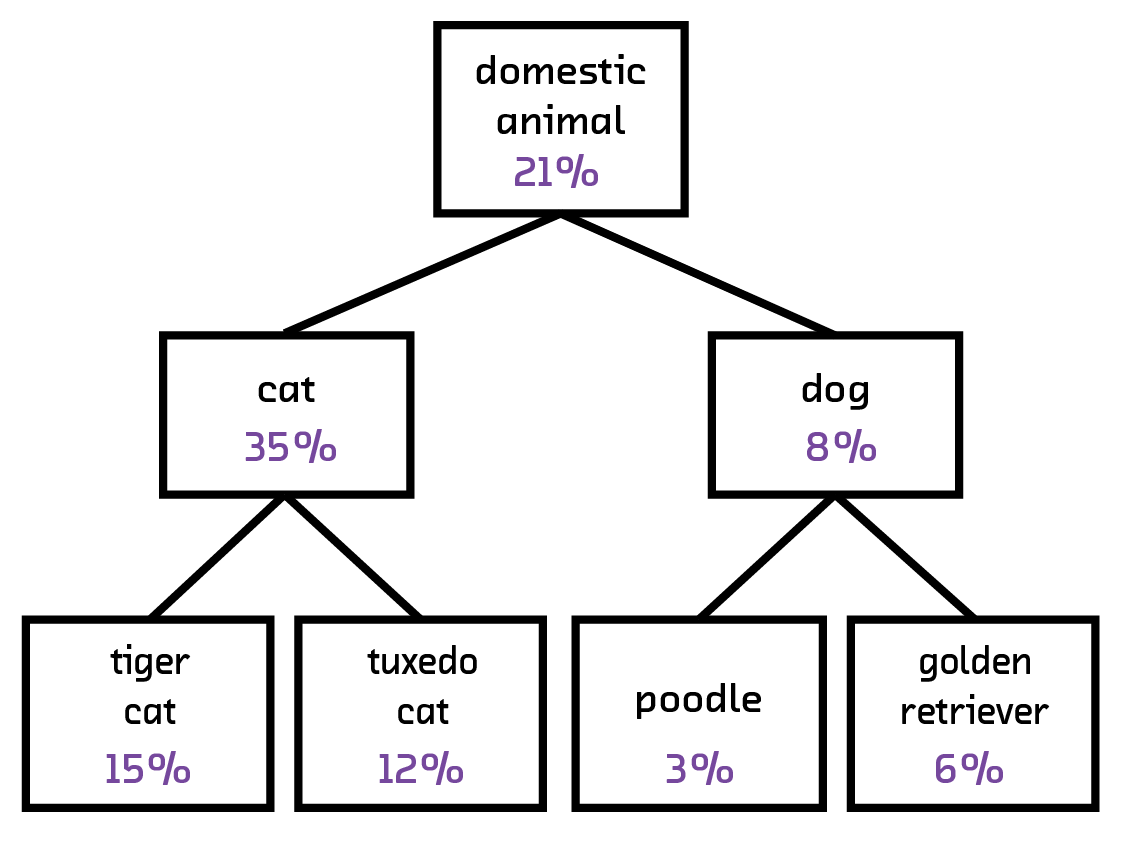

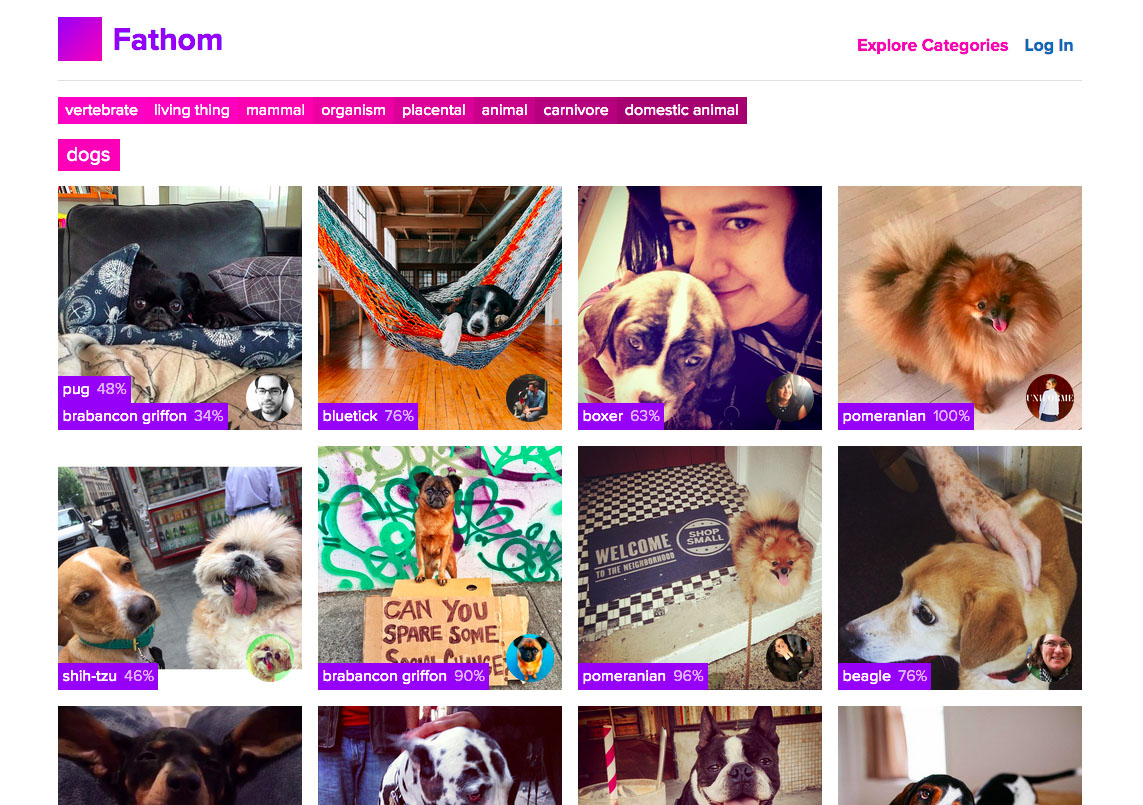

Furthermore, consideration must be given to cases where the neural network fails to give a high-confidence result, or simply gives the wrong result entirely. Currently, accuracies of >85% at image processing tasks are considered cutting edge; however, this means that for any high-volume application many incorrect results are being given. Results from a neural network also come with confidences, so a threshold in quality should be recognized for the given task and downstream applications should have procedures for when no results match the given confidence level. Another route is to use the results of the neural network to inform further algorithms in a way that can potentially increase the confidence, or at least the usability, of the results. In our prototypes, we use a hierarchical clustering on the predicted labels in order to increase the usability of low-confidence results. This draws on the intuition that even if an image cannot be confidently classified as a cat, most of the labels with nonzero confidences will be under the WordNet label “animal,” and this is a sufficiently informative label to use in this case.

Timelines

Below are some suggested timelines for working with neural networks in different situations. It is important to note that these numbers are incredibly approximate and depend very much on the problem that is being solved. We describe a problem as a “solved problem” if there already exists a pretrained model that does the specified task.

| Situation | Research | Data Acquisition | Training | Testing |

|---|---|---|---|---|

| Pre-Trained Model Available | 1 week | days | N/A | 1 week |

| Similar to Pre-Trained Model | 1 week | 1 week | days | 1 week |

| Similar to Problem in a Paper | weeks | 1 week | 1 week | 1 week |

| New application of known model | weeks | 1 week | weeks | 1 week |

| New application and novel model | months | weeks | months | weeks |

Deploying

Deploying a neural network is relatively easy; however, it can be quite

expensive. The trained model is large – easily 500 MB for a single moderately

sized network. This means that git is no longer an adequate tool for

versioning and packaging the datafile, and other means should be used. In the

past, using filename-versioned models on Amazon’s S3 has worked quite well,

particularly when done with the S3 backend for git-annex.

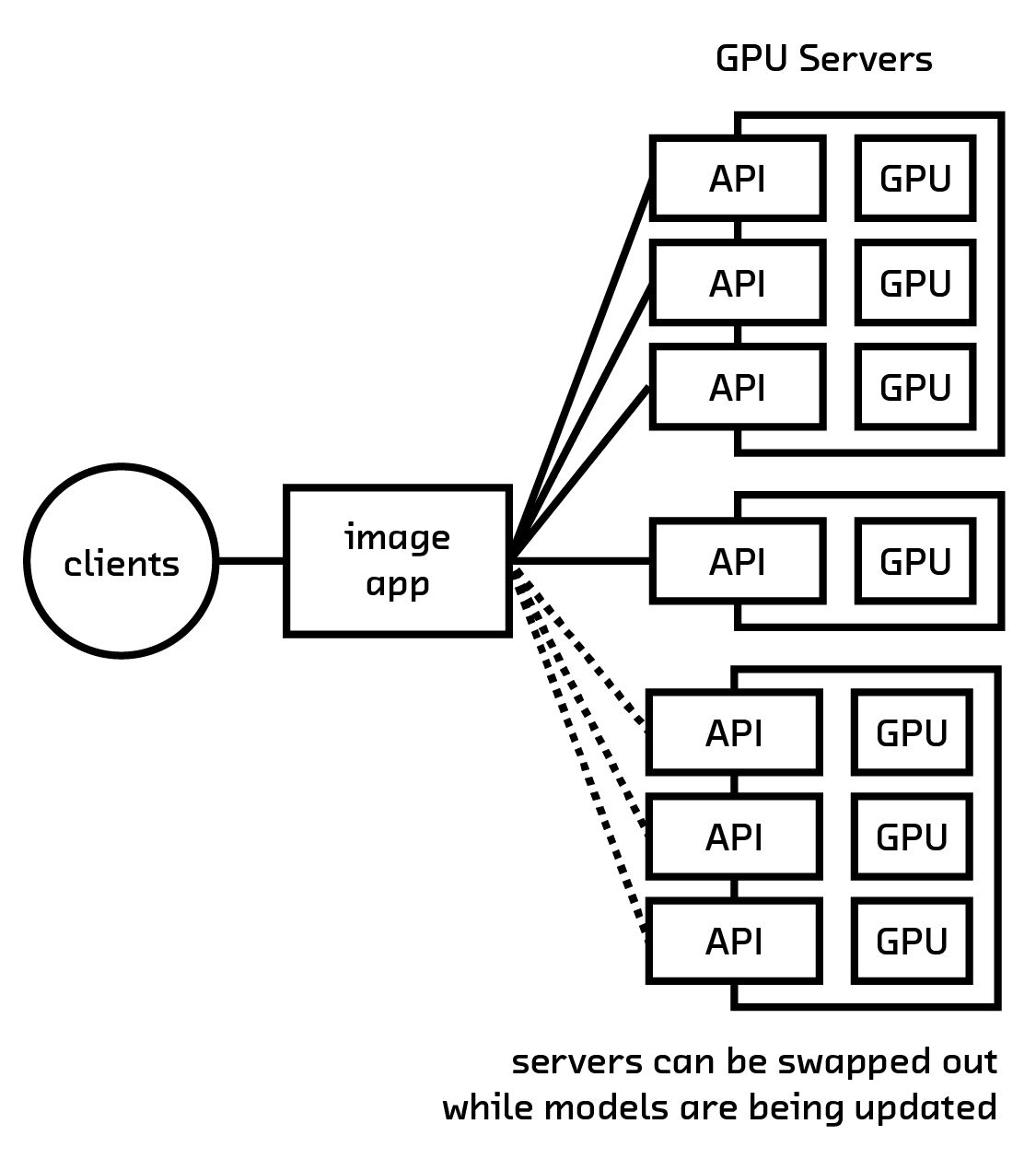

The machine that the model gets deployed on should have a GPU and be properly

configured to run mathematical operations on it. This can be difficult initially

to set up; however, once installed correctly, backups of the machine can easily

be swapped in and out [14].

The main complication comes from installing cuda if you are using an NVIDIA

device, as well as installing cuda-enabled math libraries such as theano,

caffe, cudnn, cufft, etc.

Once the model file is on a machine properly configured to use its GPU, using the neural model is quite the same as using any other model. A common route for facilitating a distributed cluster of these models is to wrap the network itself in a thin lightweight HTTP API and deploy it to a cluster of GPU-enabled machines. Then, any service in your ecosystem that must take advantage of the model’s power can pick a server in this cluster using a round-robin approach – new models can be uploaded and, one by one, computers in the neural network cluster can be updated without disrupting downstream services.

Having a policy for rolling out new models is quite important. Models generally need to be updated as their usage changes and new/different datasets become available that could increase their accuracy. It is very much suggested to instrument any service that uses the results of the neural network in order to obtain feedback data for use in future training (for example, asking the user “Was our result good?” or “What better response could we have provided?”).

Hardware

As described in 2 Neural Networks, while we think of these models as a series

of neurons connected to each other with various activation functions, the

results are actually computed with a series of vector operations. In fact, most

neural networks can be seen as simply taking the linear combination of vectors,

applying some nonlinear function (a popular choice is the tanh function), and

maybe taking a Fourier transform. These computations are perfectly suited for a

GPU, which has been optimized at the hardware level to perform linear algebra at

high speeds.

This is why NVIDIA has been very strongly pushing for using its GPUs for general mathematics as opposed to simply gaming. They have even gone as far as creating very specialized math libraries that optimize these linear algebra operations on their devices and, in recent months, developing specialized neural network hardware for their next-generation, computation-specific GPUs.

These considerations have gone from being useful for the academic working on

these problems to necessary for anyone working with neural networks – our

models are getting more and more complex, and the CPU is no longer adequate for

training or evaluating them. As a result, infrastructure using neural models

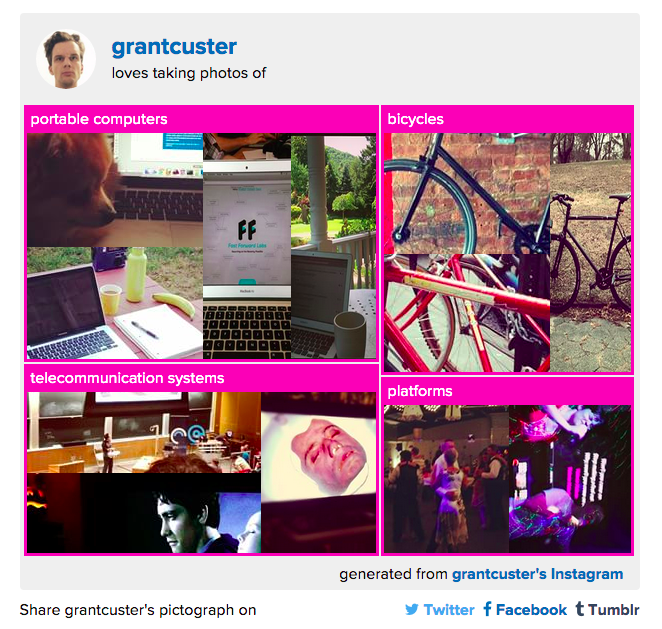

must have GPUs in order to function at acceptable speeds. When working on

Pictograph, we found that we could perform a single image classification in

about 6 seconds on the CPU, vs. 300 ms on the GPU (using the g2.2xlarge AWS

instance). Furthermore, the operations scale very well on a GPU (often

incurring almost no overhead if multiple images are classified together).

Deep Learning in Industry Today

Neural networks have been deployed in the wild for years, but new progress in Deep Learning has enabled a new generation of products at startups, established companies, and in the open source community.

In the startup community we’ve seen several companies emerge with the aim of making Deep Learning affordable and approachable for any product manager or engineer, as well as companies that apply Deep Learning to a specific problem domain, such as medicine or security. There’s been similar growth in the open source community, as companies and academics contribute code back to a variety of libraries and software packages.

Current applications of Deep Learning in industry include voice recognition, realtime translation, face recognition, video analysis, demographic identification, and tagging. We expect this flourishing of new development to continue over the next couple of years as new applications are discovered, more data assets appropriate to Deep Learning become available, and GPUs become even more affordable.

Commercial Uses of Deep Learning

The current enthusiasm for Deep Learning was spawned by a 2012 paper from Google [15] describing how researchers trained a neural network classifier on extremely large amounts of data to recognize cats (many examples used for illustration purposes in this paper is a nod to these researchers) Since then, big players like Google, Microsoft, Baidu, Facebook, and Yahoo! have been using Deep Learning for a variety of applications.

All of these companies have a natural advantage in creating Deep Learning systems – massive, high-quality datasets. They also have expertise in managing distributed computing infrastructure, giving them an advantage in applying Deep Learning techniques to real-world problems.

Google has published a follow-up to its 2012 paper [16], and is now using Deep Learning for realtime language translation, among other things. Its recent announcement of a realtime voice translation service on mobile phones is impressive both for the functionality [17] and for the application architecture – it runs on a standard smartphone.

Facebook formed FAIR,[18] the Facebook Artificial Intelligence Research Lab, in 2013, and hired Yann LeCun, one of the pioneers of Deep Learning research and a professor at NYU, as its director. FAIR has developed face recognition technology that has been deployed into an application that allows Facebook users to organize personal photos and share them with friends, [19] and contributed to numerous open source and academic projects. FAIR operates from Facebook’s New York Astor Place office, Menlo Park, CA, and Paris.

Baidu hired Andrew Ng, coauthor of the Google cat paper and Stanford professor, to lead their Deep Learning research lab out of Cupertino, CA. Baidu’s Minwa system is a purpose-built Deep Learning machine for object recognition.

Yahoo! is using image classification to automatically enrich the metadata on Flickr, its social photo site, by adding machine-generated tags to every photo. [20]

The relationships between researchers and companies are complex because these techniques emerged from a small and tight-knit research community that has recently exploded into relevance, with this obscure research area becoming one of the hottest areas for hiring among the Internet giants. Ideas that may have begun at one institution show up in applications developed by another, and people often move between institutions.

Deep Learning as a Service

If you are considering using Deep Learning but don’t plan to develop and train your own models, this section provides a guide to companies that offer services, generally through an API, that you can integrate into your products.

Clarifai

Clarifai [21] is a New York-based startup that uses Deep Learning to recognize objects in still images and video data. Clarifai’s models won the 2013 ImageNet competition.

Clarifai’s API allows users to submit an image or video, then returns a set of probability-weighted tags describing the objects recognized in the image or video and the system’s confidence in each tag. The API can also use an image input to find similar images. It runs quickly; it is capable of identifying objects in video streams faster than the video plays.

Founder Matthew Zeiler says, “Clarifai is building products that empower people to understand the massive amounts of information they are exposed to daily, making it easy to automatically organize, analyze, and share.”

Dextro

New York-based Dextro [22] offers a service that analyzes video content to extract a high-level categorization as well as a fine-grained visual timeline of the key concepts that appear onscreen. Dextro powers discovery, search, curation, and explicit content moderation for companies that work with large volumes of video.

Dextro’s models are built specifically for the sight, sound, and motion of video; its API accepts prerecorded videos or live streams, and outputs JSON objects of identified concepts and scenes with a timeline of their visibility and how prominent they were onscreen. Dextro can output in IAB Tier 2, Dextro’s own taxonomy, or any partner taxonomy.

Dextro offers a great service for any company that has an archive of video content or live streams that they would like to make searchable, discoverable, and useful.

David Luan, cofounder of Dextro, describes it as follows: “Dextro is focused on immediate customer use cases in real-world video, and excels at user-generated content. Our product roadmap is driven by our users; we automatically train large batches of new concepts every week based on what our partners ask for.”

CloudSight

CloudSight ^cloudsightapi.com/] is a Los Angeles-based company focusing on image recognition and visual search. Their primary offering is an API that accepts images and returns items that are identified in those images. They also use this API to power two apps of their own: TapTapSee, which helps visually impaired uses navigate using their mobile phones, and CamFind, which is a mobile visual search tool where users can submit images from their cameras as queries.

[[FIG-cloudsight]] .CloudSight recognizes a puppy image::figures/cloudsight_beagle.png[scaledwidth=“90%”]

MetaMind

MetaMind [23] offers products that use recursive neural networks and natural language processing for sentiment analysis, image object recognition (especially food), and semantic similarity analysis. MetaMind is located in Palo Alto, CA, and employs a number of former Stanford academics, including its founder and CEO, Richard Socher. It raised $8 million of capital in December 2014.

Dato

Dato [24] is a machine learning platform targeted at data scientists and engineers. It includes components that make it simple to integrate Deep Learning as well as other machine learning approaches to classification problems.

Dato doesn’t expose its models, so you are not always certain what code, exactly, is running. However, it offers fantastic speed compared to other benchmarks. It’s a good tool for data scientists and engineers who want a fast, reliable model-in-a-box.

LTU Technologies

LTU Technologies [25] is an image search company founded in 1999 that offers a suite of products that search for similar images and can detect differences in similar images. For example, searching a newspaper page from two different dates may reveal two advertisements that are similar except for the prices of the advertised product. LTU Technologies’ software is also geared toward brand and copyright tracking.

Nervana Systems

Nervana Systems [26] offers a cloud hardware/software solution for Deep Learning. Nervana also maintains an open source Deep Learning framework called Neon [27], which they describe as the fastest available. Notably, Neon includes hyperparameter optimization, which simplifies tuning of the model. Based in San Diego, Nervana was founded in April 2014 and quickly raised a $3.3 million Series A round of capital.

Skymind

Skymind [28] is a startup founded by Adam Gibson, who wrote the open source package DeepLearning4j [29]. Skymind provides support for enterprise companies that use DeepLearning4j in commercial applications. Skymind refers to itself as “The Red Hat of Open-Source AI for Enterprise.”

Unlike other startups listed here, Skymind does not provide service in the form of an API. Rather, it provides an entire general-purpose Deep Learning framework to be run with Hadoop or with Amazon Web Services Spark GPU systems. The framework itself is free; Skymind sells support to help deploy and maintain the framework. Skymind claims that DeepLearning4j is usable for voice-to-text tasks, object and face recognition, fraud detection, and text analysis.

Recently acquired startups

There have recently been many acquisitions of startups using Deep Learning technology. These include Skybox Imaging (acquired by Google), Jetpac (also acquired by Google), Lookflow (acquired by Yahoo!), AlchemyAPI (acquired by IBM), Madbits (acquired by Twitter), and Whetlab (also acquired by Twitter).

The challenges for independent Deep Learning startups include attracting the necessary talent (generally PhDs with expertise in neural networks and computer vision), accessing a suitably large and clean dataset, and finally figuring out a business model that leads to a reasonable monetization of their technology. Given these challenges, it’s not surprising that many small companies choose to continue their missions inside of larger organizations.

Startups Applying Deep Learning to a Specific Domain

Many entrepreneurs are excited about the potential of Deep Learning and are building products that have only recently become possible because of the accessibility of these techniques.

Healthcare

Healthcare applications push the boundaries of machine learning with large datasets and a tempting market with a real impact.

Enlitic [30], a San Francisco-based startup, uses Deep Learning for medical diagnostics, focusing on radiology data. Deep Learning is fitting for this application because a Deep Learning system can analyze more information than a doctor has ready access to, and may notice subtleties that are not clear to humans.

Atomwise [31] is a Canadian startup with offices in San Francisco that focuses on using Deep Learning to identify new drug candidates, and Deep Genomics [32] focuses on computational genomics and biomolecule generation.

For quite a long time, analysis of text records has been used to improve the patient experience and expand the knowledge available to doctors and nurses. However, that information is severely limited in scope and detail, simply as a result of being a burden to maintain for doctors. The ability to automatically encode the information in images such as x-ray and MRI scans into these records would provide a valuable additional source of data, without placing an added burden on the healthcare practitioners.

Security

The security domain offers subtle pattern recognition problems. Is this server failing? Is that one being compromised by unusual external activity? Deep learning is a fantastic mechanism for this sort of problem since it can be trained to understand what “normal operating conditions” are and alert when something deviates from it (regardless of whether the operators knew whether to intentionally put in a rule or heuristic for it). For this reason, neural networks have even been used as a backup system to monitor the safety of nuclear facilities. As a result, there has been progress in both academic research [33] and industry.

Canary [34] offers a home security device that alerts the customer’s mobile phone when unusual activity is detected in the home.

Lookout [35] offers an enterprise mobile predictive security solution that identifies potential problems before they manifest.

Marketing

Marketing is an obvious application domain for image analysis. Current media analytics products are generally limited to analyzing text data, and being able to extend that analysis to images has real value.

One product in this space comes from Ditto Labs [36], a startup based in Cambridge, MA. Their analytics product scans Twitter and Instagram photos for identifiable products and logos, and creates a feed for marketers showing brand analytics on social media. Marketers can track their own brands or competitors’ in a dashboard, or use an API.

Being able to identify the demographics of consumers is another interesting application that is already in the wild. Kairos [37] specializes in face recognition and analysis in images and video. Their SDK and APIs allow customers to identify individuals by face as well as estimate the demographics of unknown faces (gender, age, emotions, engagement). Finally, they offer a crowd analysis product that automates crowd analytics.

Data Enrichment

Document analysis firm Captricity [38] offers a product that helps established companies with information in paper format, such as insurance companies, convert this information into useful and accurate digital data. Captricity uses Deep Learning to identify the type of form represented in a scan – for example, a death certificate – and to recognize when form fields are empty.

Neural Network Patents

Our review of patents in the area does not reveal any dominant patent holders or limiting patents in the field. Rather, the field seems scattered with only a handful of patents issued to a small number of patentees. Given that the quantity and quality of data fed into the neural network is a major factor in the utility of the system, it is not surprising that there are few key patents in the area of Deep Learning.

There is one notable exception here: NEC Laboratories has received a number of Deep Learning patents, with many focused on visual analytical applications and some applications in text processing. As a few examples, NEC holds US Patent No. 8,345,984, covering methods for recognizing human actions in video images using convolutional neural nets; US Patent No. 8,582,807, dealing with gender classification and age estimation of humans in video images; US Patent No. 8,234,228, covering methods for unsupervised training of neural nets; and US Patent No. 8,892,488, focusing on document classification.

Open Source Neural Network Tools

The open source landscape for neural network tools is quite vast. This stems primarily from the fact that this is a very active academic field and is constantly changing. As a result, the only tools that can stay on top of the trends are those that are open source, have a thriving community, and cater to both users and the academics leading the advances in the field. We provide a survey here of both the tried and true libraries and the ones that the community is the most excited about at the time of writing; bear in mind, though, that the landscape is constantly changing. New libraries are sure to be introduced to the community, although they will mostly be specially built for a particular purpose or built on one of these technologies.

Theano

Theano is a Python-based open source tensor math library that couples tightly with

numpy and provides mechanisms for both CPU- and GPU-based computing. As a

result, theano is used quite heavily when building new types of neural networks

or building existing networks from scratch: code can be prototyped quickly

yet still run at GPU speeds.

While this library is extremely powerful, it is often overly general for those

just getting into the field of neural networks. However, since most other

Python neural network libraries use theano in the background, it still can be

an incredibly important tool to understand.

PyBrain2

PyBrain2 is another open-source Python neural network library focused on

simplicity. It is provided as a layer on top of theano, with a simple

pipeline for creating neural networks from already well understood pieces. As a

result, a convolutional neural network can be quickly and easily deployed using

this system.

However, pybrain2 does not completely hide the internals of the models you are

creating, and as a result it is used quite frequently in academic work when

experimenting with new network designs or training procedures. As a result, its

API is rapidly evolving, with new features being added regularly.

pybrain2 is a good choice for beginners to intermediate users who want

to potentially work with the internals of their network but don’t want to

reimplement existing work.

Keras

Keras, the final open-source Python library we will look at, is the simplest of all the options, providing an extremely simple and clean API to quickly and easily create networks with well understood layers. While it does provide mechanisms to see the internals of the network, the focus is on doing a small set of things correctly and efficiently.

As a result, many of the recent innovations in neural networks can be easily

implemented using keras. For example, an image captioning network can be

implemented and trained in just 25 lines of code!

[39] This makes keras the best choice for

Python developers looking to play with neural networks without spending too much

time working on the internals of the model.

caffe

Caffe is a C++ library created at UC Berkeley, released under the 2-clause BSD

license, with a Python library included. It is quite fully featured and is

generally used as a standalone program. In it, most of the common neural network

methods are already implemented and any customized model can be created by

simply creating a YAML configuration file. In addition, as mentioned previously,

many pretrained models are provided through Caffe’s “model zoo,”

[40] which makes this a good

option for those wishing to use preexisting models. Finally, caffe has been

accepted by NVIDIA as an official neural network library and as a result is

very optimized to run on their GPUs. [41]

While caffe is quite fast to use and to run, especially if using a pretrained

network, the API is quite unfriendly and installing it is known to be difficult.

The main source of pain with the API is understanding how custom data should be

represented for use with the standalone application. Furthermore, caffe's

documentation is quite lacking, which makes understanding how to create the YAML

configuration for a new network tedious. This lack of documentation carries